ARCH: The NextGen IaC Generator

A Journey from Infrastructure Chaos to Organized Cloud Management

TL;DR: At Transmit Security, we developed ARCH (Automated Reusable Configuration Hierarchy), a comprehensive IaC management system that transforms how we handle infrastructure code across multiple products, environments, and cloud providers. By using a hierarchical YAML structure with templates to generate HCL files, we've reduced setup time by 85%, eliminated configuration drift, and enabled faster team mobility. This article details our journey, implementation, and the real-world impact of standardizing our infrastructure approach.

Let me tell you about the time we almost lost our minds trying to keep track of our cloud infrastructure at Transmit Security.

It started innocently enough. We had one product, a couple of environments, some AWS accounts, and a bit of GCP thrown in. Fast forward five years, and we were drowning in a sea of IaC configurations spread across dozens of product repositories with no clear standards.

"Which module version are we using in production again?" became a daily question. "Why does the staging environment have a different VPC setup than prod?" was another favorite. And my personal nightmare: "Why does this service have different permissions across different environments?"

Sound familiar? I thought so.

After a long run of conflicts that forced us to create new cloud resources in several environments and import them into our state, we knew something had to give. That's when we set out to build ARCH, which I now consider our secret weapon.

ARCH, our Automated Reusable Configuration Hierarchy, is a comprehensive Infrastructure as Code (IaC) management system that has completely transformed how we handle our infrastructure code.

As a fast-growing company with many products and environments, running on all major clouds, we needed a better way to manage our infrastructure and scale quickly. Our identity and security solutions were expanding rapidly across AWS, GCP, and Microsoft Azure, and the traditional approach to infrastructure management simply couldn't keep pace with our growth trajectory. We needed something that would eliminate bottlenecks, standardize deployments, and give our teams the agility to innovate without being bogged down by infrastructure complexities.

If you've worked with infrastructure at scale, you know the pain points:

Before our current system, our per-product repositories were filled with conflicting standards, inconsistent naming conventions, and a significant amount of duplicated, unorganized code. Making a simple change across all environments often meant updating dozens of files, with the constant risk of missing something important.

After evaluating various approaches, we designed a system that leverages Terragrunt and Gruntwork's boilerplate to generate and maintain HCL files.

Teams work in their own repos, with ARCH generating the right HCL structure behind the scenes.

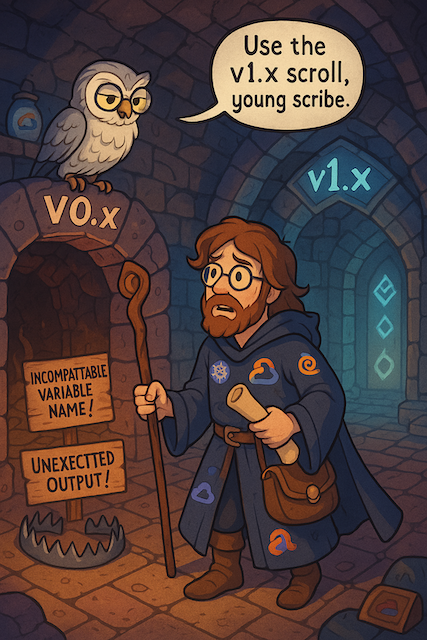

Terraform modules evolve over time — sometimes with breaking changes that require different inputs, outputs, or structural definitions. To handle this without cluttering our logic or forcing "if-else" chaos in templates, we introduced version-aware HCL templates.

We structure our templates directory based on the major version of the Terraform module. Each versioned folder contains a complete, valid HCL template that maps to the expected structure of that module version.

ARCH picks the right template automatically based on the module version in your YAML.

Our YAML spec (the desired state) includes the desired module version (e.g., v1.2.3). During the rendering phase, the GitHub Action reads that version and renders the template from the folder matching its major version.

This approach allows us to support multiple versions of the same module in parallel — critical when different environments or products are on different upgrade cycles.
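To make the version-aware lookup concrete, here is a minimal Python sketch of how a major version could be mapped to a template folder. It is an illustration under stated assumptions, not ARCH's actual code: the `templates/<module>/v<major>/terragrunt.hcl` layout, the file name, and the function names are all hypothetical.

```python
import re
from pathlib import PurePosixPath

# Hypothetical layout: templates/<module>/v<major>/terragrunt.hcl
TEMPLATES_ROOT = PurePosixPath("templates")


def major_version(version: str) -> int:
    """Extract the major component from a version such as 'v1.2.3' or '1.2.3'."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unsupported version string: {version!r}")
    return int(match.group(1))


def template_path(module: str, version: str) -> PurePosixPath:
    """Build the path of the template folder that matches the module's major version."""
    return TEMPLATES_ROOT / module / f"v{major_version(version)}" / "terragrunt.hcl"


if __name__ == "__main__":
    # Two environments on different upgrade cycles resolve to different templates.
    print(template_path("bigtable", "v1.2.3"))  # templates/bigtable/v1/terragrunt.hcl
    print(template_path("bigtable", "v2.0.0"))  # templates/bigtable/v2/terragrunt.hcl
```

Keying the folder on the major version only means minor and patch upgrades reuse the same template, while a breaking release gets its own complete template instead of conditionals inside a shared one.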

The magic happens when we combine these components. Here's the flow:

This approach means that each service's HCL file contains everything needed for deployment, making the process streamlined and less prone to errors.

Define common settings once, override when needed.

Take a look at this folder tree:

This hierarchy allows us to make broad changes at higher levels while maintaining the flexibility to customize at more specific levels.
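The "define once, override when needed" behavior can be sketched as a deep merge applied from the broadest level to the most specific one. The Python below is only an illustration: the level names and keys are made up, the YAML files are represented as already-parsed dictionaries, and the choice to replace lists wholesale rather than concatenate them is an assumption, not a statement about how ARCH merges.

```python
from copy import deepcopy
from typing import Any


def deep_merge(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict where `override` wins; nested dicts are merged recursively."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            # Scalars and lists from the more specific level replace the inherited value.
            merged[key] = deepcopy(value)
    return merged


def merge_hierarchy(levels: list[dict[str, Any]]) -> dict[str, Any]:
    """Merge levels ordered from broadest (first) to most specific (last)."""
    result: dict[str, Any] = {}
    for level in levels:
        result = deep_merge(result, level)
    return result


# Hypothetical contents of three levels, already parsed from YAML.
globals_level = {"logging": {"level": "info", "retention_days": 30}, "tags": {"owner": "platform"}}
env_level = {"logging": {"level": "debug"}}
region_level = {"region": "us-east1"}

merged = merge_hierarchy([globals_level, env_level, region_level])
```

Here `merged["logging"]` keeps the inherited `retention_days` while the environment level overrides only `level`, which is the kind of narrow override the hierarchy is meant to allow.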

Our pre-built templates ensure that all services are defined consistently, regardless of which team or engineer set them up. This consistency extends across environments, regions, and cloud providers.

For instance, a PostgreSQL database in development has the same configuration structure as the one in production and stays as close to it as possible; only specific values differ based on each environment's needs.

Want to provision a new environment or cloud? Just add a few YAML files. The system takes care of the rest in minutes.

This scalability has been particularly valuable as we've expanded to new regions and added new products, allowing us to maintain a consistent infrastructure approach throughout our growth.

With automation handling the complex parts of infrastructure generation, we've seen a significant decrease in deployment errors. The system ensures that all dependencies are properly defined and that all required values are present before deployment.
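A pre-deployment check for required values might look roughly like the Python sketch below. The dotted-path convention, the key names, and the `missing_keys` helper are assumptions made for illustration; the article does not describe how ARCH performs this validation.

```python
from typing import Any


def missing_keys(config: dict[str, Any], required: list[str]) -> list[str]:
    """Return the dotted paths from `required` that are absent (or None) in `config`."""
    missing = []
    for path in required:
        node: Any = config
        for part in path.split("."):
            if not isinstance(node, dict) or part not in node:
                node = None
                break
            node = node[part]
        if node is None:
            missing.append(path)
    return missing


# Hypothetical merged configuration for one service.
service_config = {"module": {"version": "v1.2.3"}, "region": "us-east1"}
problems = missing_keys(service_config, ["module.version", "region", "instance.name"])
```

Failing the generation step when `problems` is non-empty surfaces an incomplete definition at review time rather than during deployment.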

Need to whitelist a vendor's new IPs? Add them once, and they propagate across all products.

To further reduce the risk of configuration drift and keep our infrastructure in line with our desired state, we added a scheduled automation that runs the same infrastructure generation pipeline (the generate-infrastructure action) for all relevant environments and opens a pull request only if there are changes.
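The "open a pull request only if there are changes" decision comes down to comparing freshly generated files with what is already committed. The Python sketch below shows that comparison on in-memory mappings of path to file content; the function names and file paths are hypothetical, and the real pipeline runs as a GitHub Action rather than as this code.

```python
def changed_files(current: dict[str, str], generated: dict[str, str]) -> list[str]:
    """Paths that were added, removed, or whose content differs after regeneration."""
    paths = set(current) | set(generated)
    return sorted(p for p in paths if current.get(p) != generated.get(p))


def should_open_pull_request(current: dict[str, str], generated: dict[str, str]) -> bool:
    """A pull request is only worth opening when regeneration changed something."""
    return bool(changed_files(current, generated))


# Hypothetical committed files versus the output of a scheduled regeneration.
committed = {"prod/db.hcl": "size = 2\n", "prod/cache.hcl": "nodes = 1\n"}
regenerated = {"prod/db.hcl": "size = 3\n", "prod/cache.hcl": "nodes = 1\n"}

drift = changed_files(committed, regenerated)
```

An empty `drift` list means the committed HCL already matches the desired state, so the scheduled run can finish without opening anything.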

Let's take a BigTable template as an example. The template looks like this:

Merging the YAML files in the hierarchy for a product named "product", in the "main" project of our "prod" environment on the "gcp" cloud in the "us-east1" region, produces a file containing the following:

The generated HCL file will look like this:

In every other environment that uses BigTable, the generated HCL is built with the same standardization. That's how we keep consistency while preserving each environment's flexibility to set the configuration it needs.

We tested ARCH by migrating an old environment and creating a new one.

Results:

When a critical vulnerability was announced in one of our cloud providers recently, we were able to patch all affected resources across our entire organization in under a day. Before this system? That would have been weeks of work and plenty of missed instances.

Building this system wasn't without challenges. Here are some key lessons we've learned:

We quickly learned that the single most important practice is our "define once" philosophy. Every value belongs at exactly one level in the hierarchy — the highest level where it makes sense.

This approach means that when we need to make a change that affects multiple services or environments, we make it in one place. Next month, we'll need to update our logging configuration across all products. One change to globals.yaml, one PR, and it propagates everywhere automatically. The old way? Probably 10+ PRs, reshuffled priorities and context switches for members of every team, and days of work.
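One way to keep a "define once" rule honest is a small lint that flags values a lower level repeats unchanged from its parent. The Python sketch below is a hypothetical helper, not something the article says ARCH includes; the level contents are invented for the example.

```python
from typing import Any


def redundant_overrides(parent: dict[str, Any], child: dict[str, Any], prefix: str = "") -> list[str]:
    """Dotted paths where `child` restates exactly the value it would inherit from `parent`."""
    redundant = []
    for key, value in child.items():
        if key not in parent:
            continue  # Defined only at the child level, so nothing is duplicated.
        path = f"{prefix}{key}"
        if isinstance(value, dict) and isinstance(parent[key], dict):
            redundant.extend(redundant_overrides(parent[key], value, f"{path}."))
        elif value == parent[key]:
            redundant.append(path)
    return redundant


# Hypothetical parsed contents of a global level and one environment level.
global_values = {"logging": {"level": "info"}, "region": "us-east1"}
env_values = {"logging": {"level": "info", "format": "json"}, "region": "eu-west1"}

duplicates = redundant_overrides(global_values, env_values)
```

In this example only `logging.level` is flagged: it repeats the global value, whereas `region` is a genuine override and `logging.format` exists only at the environment level.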

I won't pretend implementing this system was easy. We spent weeks designing the hierarchy, creating the initial templates, and building the automation. There were heated debates about where certain values belonged. And yes, there was pushback from teams comfortable with their existing approaches.

If you're struggling with similar challenges, I'd encourage you to consider a hierarchical approach like ours. Start small, perhaps with just one product or environment, and gradually expand. Focus on building good templates and automation from the beginning. And remember that the goal isn't just consistency for consistency's sake — it's about enabling your teams to move faster with confidence.

The cloud infrastructure world isn't getting any simpler. But with the right systems in place, it doesn't have to be chaotic.